DualCLIPLoader

The DualCLIPLoader node settles the age-old argument of which CLIP is better by just using both. It loads two text encoder models at once and hands them to your workflow as a single CLIP output. That is exactly what architectures built around two encoders expect, such as SDXL (CLIP-L + CLIP-G) or Flux (CLIP-L + T5).

Whether you're running SDXL, SD3, Flux, or even Hunyuan Video, this node helps unlock the full potential of multi-CLIP workflows in ComfyUI.

🔧 Node Type

DualCLIPLoader

Outputs: CLIP

📁 Function in ComfyUI Workflows

The DualCLIPLoader node loads two distinct text encoder models into a single combined CLIP output. Downstream nodes such as CLIPTextEncode use it to turn your prompt into conditioning, which then feeds the sampler (KSampler takes that conditioning, not the CLIP itself). It’s especially useful in workflows where you're combining:

- Two complementary text encoders (e.g., CLIP-L + CLIP-G for SDXL, or CLIP-L + T5 for Flux)

- Two stylistically different CLIPs (like realism and anime)

- CLIPs trained on different languages or datasets

- Experimental comparative generation setups

It’s a smart choice when you want more control, more nuance, and less guesswork in how your prompt is interpreted.

🧠 Technical Details

This node:

- Loads two text encoder model files (usually `.safetensors`)

- Outputs a combined `CLIP` object

- Supports architecture-specific types (`sdxl`, `sd3`, `flux`, `hunyuan_video`)

- Can optionally load the encoders on the CPU (`device` set to `cpu`) to save VRAM; the other option, `default`, lets ComfyUI pick the device

Internally, the node looks up both filenames in your text encoder folder and loads them together as one CLIP object configured for the selected `type`.
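To make "combined CLIP object" more concrete, here is a toy sketch of how SDXL uses its two encoders: each one encodes the same prompt, and the per-token features are concatenated, so 768 values from CLIP-L plus 1280 from CLIP-G give SDXL's 2048-wide conditioning context. The encoder function is a hypothetical dummy, not a real model and not ComfyUI's code; the real pipeline also handles details such as the pooled output, and other types (for example `flux`) combine their encoders differently.

```python
from typing import List

Matrix = List[List[float]]  # one feature vector per token

def encode_dummy(num_tokens: int, width: int, fill: float) -> Matrix:
    """Hypothetical stand-in for a text encoder: constant features per token."""
    return [[fill] * width for _ in range(num_tokens)]

def combine_sdxl_style(a: Matrix, b: Matrix) -> Matrix:
    """Concatenate two encoders' outputs token by token along the feature axis."""
    if len(a) != len(b):
        raise ValueError("both encoders must produce the same number of tokens")
    return [row_a + row_b for row_a, row_b in zip(a, b)]

TOKENS = 77                                   # CLIP's usual token window
clip_l_out = encode_dummy(TOKENS, 768, 0.1)   # CLIP-L feature width
clip_g_out = encode_dummy(TOKENS, 1280, 0.2)  # CLIP-G feature width
context = combine_sdxl_style(clip_l_out, clip_g_out)
print(len(context), len(context[0]))          # 77 2048
```

The weights of the two encoders never mix; only their outputs are joined.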

⚙️ Settings and Parameters

| 🔲 Field | 💬 Description |
|---|---|
| clip_name1 | Filename of the first text encoder. Must be a file in your `models/text_encoders` folder (older installs: `models/clip`), usually `.safetensors`. Example: clip_l.safetensors. |
| clip_name2 | Filename of the second text encoder, used together with the first. Which file belongs here depends on `type`. Example: clip_g.safetensors for SDXL, or t5xxl_fp16.safetensors for Flux. |
| type | Model recipe this encoder pair is used with. Must match your checkpoint and downstream pipeline. Options include: sdxl, sd3, flux, hunyuan_video. |
| device | (Optional) Where to load the encoders. `default` lets ComfyUI choose (usually the GPU); `cpu` keeps them in system RAM to save VRAM at the cost of speed. |
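A quick way to sanity-check the `clip_name1` / `clip_name2` / `type` combination before queueing is sketched below. It is a hypothetical helper, not part of ComfyUI. The pairings reflect the commonly documented recipes (SDXL: CLIP-L + CLIP-G; Flux: CLIP-L + T5; SD3: any two of CLIP-L, CLIP-G, T5), and guessing the encoder family from the filename is an assumption that only works with conventional names like `clip_l.safetensors` or `t5xxl_fp16.safetensors`.

```python
# Hypothetical recipe table; types not listed here (e.g. hunyuan_video) return False.
RECIPES = {
    "sdxl": [{"clip_l", "clip_g"}],
    "sd3": [{"clip_l", "clip_g"}, {"clip_l", "t5"}, {"clip_g", "t5"}],
    "flux": [{"clip_l", "t5"}],
}

def guess_encoder(filename: str) -> str:
    """Guess the encoder family from a conventional filename (heuristic only)."""
    name = filename.lower()
    for family in ("clip_l", "clip_g", "t5"):
        if family in name:
            return family
    return "unknown"

def pair_matches_type(type_: str, clip_name1: str, clip_name2: str) -> bool:
    """True if the two files look like a known encoder pair for type_ (order-independent)."""
    found = {guess_encoder(clip_name1), guess_encoder(clip_name2)}
    return found in RECIPES.get(type_, [])

print(pair_matches_type("sdxl", "clip_l.safetensors", "clip_g.safetensors"))      # True
print(pair_matches_type("flux", "clip_l.safetensors", "clip_g.safetensors"))      # False
print(pair_matches_type("flux", "t5xxl_fp16.safetensors", "clip_l.safetensors"))  # True
```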

✅ Benefits

- Richer prompt understanding – Two text encoders read the same prompt, and the model conditions on both of their outputs.

- Style fusion – Blend the strengths of two different CLIPs (realism + anime, anyone?).

- More accurate prompts – Dual interpretation gives better grounding to both positive and negative prompts.

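- Proper SDXL, SD3, and Flux setups – These architectures were built around multiple text encoders (SDXL: CLIP-L + CLIP-G; Flux: CLIP-L + T5; SD3: any two of CLIP-L, CLIP-G, and T5 with this node), and this node is the plug-in brain for them.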

⚙️ Usage Tips

- Always match the `type` field with the checkpoint type you're using. Don’t mix `sdxl` with `flux`, or your generation will go sideways.

- If you’re running low on VRAM, set `device` to `"cpu"` to keep the text encoders in system RAM. The setting applies to both encoders, and prompt encoding will be slower.

- Pair encoders the way your model was trained. For SDXL that means CLIP-L plus CLIP-G; swapping in an unrelated encoder usually degrades results.

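- Want to experiment with prompting style? Try fine-tuned variants (say, one tuned for realism and one for fantasy), as long as each has the same architecture as the slot it replaces, such as a fine-tuned CLIP-L in place of the stock CLIP-L. Compare results using the same prompt and seed.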
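If you want a rough rule for when to switch `device` to `cpu`, the sketch below compares the encoders' file sizes against free VRAM. It is a hypothetical helper, not something ComfyUI provides: file size only approximates weight memory (ComfyUI may cast weights to another precision), the 1.2× `headroom` margin is an arbitrary assumption, and you have to obtain the free-VRAM figure yourself (for example from `torch.cuda.mem_get_info()`, which returns free and total bytes).

```python
import os
import tempfile

def encoder_bytes(*paths: str) -> int:
    """Rough weight footprint: total size of the encoder files on disk."""
    return sum(os.path.getsize(p) for p in paths)

def suggest_device(paths, free_vram_bytes: int, headroom: float = 1.2) -> str:
    """Hypothetical helper that picks a DualCLIPLoader `device` value.

    `headroom` is an assumed safety margin for activations and other
    allocations; tune it for your setup.
    """
    needed = encoder_bytes(*paths) * headroom
    return "default" if needed <= free_vram_bytes else "cpu"

if __name__ == "__main__":
    # Demo with dummy files standing in for real encoders.
    with tempfile.TemporaryDirectory() as tmp:
        a = os.path.join(tmp, "clip_l.safetensors")
        b = os.path.join(tmp, "clip_g.safetensors")
        for path, size in ((a, 1000), (b, 2000)):
            with open(path, "wb") as f:
                f.write(b"\0" * size)
        print(suggest_device([a, b], free_vram_bytes=4000))  # default
        print(suggest_device([a, b], free_vram_bytes=3500))  # cpu
```

Because `device` applies to both encoders at once, the helper looks at their combined size.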

📍 ComfyUI Setup Instructions

- Place your text encoder files (`.safetensors`) in your ComfyUI `models/text_encoders` folder. Older installs use `models/clip`, which ComfyUI still scans.

- Add the DualCLIPLoader node to your workflow.

- Set `clip_name1` and `clip_name2` to the exact filenames.

- Set the `type` field to match your pipeline (`sdxl`, `flux`, etc.).

- Optionally assign a `device` (or just leave it as `"default"`).

- Connect the output to any node that expects a `CLIP` input.
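To double-check "exact filenames" outside the UI, a small script can list what should appear in the dropdown. This is a hypothetical sketch, not ComfyUI's own lookup code: it assumes the default folder layout, where ComfyUI scans `models/text_encoders` and the legacy `models/clip` (subfolders included), and it ignores any extra paths configured in `extra_model_paths.yaml`.

```python
import tempfile
from pathlib import Path
from typing import List, Optional

# Assumed default layout: newer installs use models/text_encoders,
# older ones models/clip. extra_model_paths.yaml entries are not handled.
SEARCH_DIRS = ("models/text_encoders", "models/clip")

def list_encoders(comfy_root: str) -> List[str]:
    """Names (relative to their folder) of .safetensors files, subfolders included."""
    names = set()
    for sub in SEARCH_DIRS:
        folder = Path(comfy_root) / sub
        if folder.is_dir():
            names.update(p.relative_to(folder).as_posix() for p in folder.rglob("*.safetensors"))
    return sorted(names)

def find_encoder(comfy_root: str, filename: str) -> Optional[Path]:
    """First existing match for filename, searching SEARCH_DIRS in order."""
    for sub in SEARCH_DIRS:
        candidate = Path(comfy_root) / sub / filename
        if candidate.is_file():
            return candidate
    return None

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        enc_dir = Path(root) / "models" / "text_encoders"
        enc_dir.mkdir(parents=True)
        (enc_dir / "clip_l.safetensors").write_bytes(b"")
        print(list_encoders(root))                       # ['clip_l.safetensors']
        print(find_encoder(root, "clip_g.safetensors"))  # None
```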

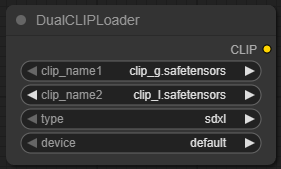

📎 Example Node Configuration

- clip_name1: clip_l.safetensors
- clip_name2: clip_g.safetensors
- type: sdxl
- device: default

In this setup, we’re loading SDXL's two text encoders, CLIP-L and CLIP-G, as separate files. This is handy when your SDXL model file doesn't include its text encoders, or when you want to swap in a different encoder file.
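If you drive ComfyUI through its API rather than the canvas, the same configuration can be written in the API ("prompt") workflow format, where each node is keyed by an id and has a `class_type` and `inputs`, and a link is written as `[source node id, output index]`. The sketch below builds only a fragment (DualCLIPLoader feeding CLIPTextEncode); the node ids and prompt text are arbitrary placeholders, and a complete graph still needs model loading, sampling, decoding, and a save node before it can be queued through the server's `/prompt` endpoint.

```python
import json

def dual_clip_fragment(clip_name1: str, clip_name2: str, type_: str = "sdxl",
                       device: str = "default",
                       prompt_text: str = "a lighthouse at dusk") -> dict:
    """API-format fragment: DualCLIPLoader (node "1") feeding CLIPTextEncode (node "2")."""
    return {
        "1": {
            "class_type": "DualCLIPLoader",
            "inputs": {
                "clip_name1": clip_name1,
                "clip_name2": clip_name2,
                "type": type_,
                "device": device,
            },
        },
        "2": {
            "class_type": "CLIPTextEncode",
            "inputs": {
                "text": prompt_text,
                # Link format: [source node id, output index]; output 0 is the CLIP.
                "clip": ["1", 0],
            },
        },
    }

if __name__ == "__main__":
    fragment = dual_clip_fragment("clip_l.safetensors", "clip_g.safetensors")
    print(json.dumps(fragment, indent=2))
```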

🔥 What-Not-To-Do-Unless-You-Want-a-Fire

- ❌ Don’t mix model types. Your `type` must match your checkpoint or you’ll get garbage output (or no output at all).

- ❌ Don’t load large encoders on the GPU on low-VRAM systems. You can run out of memory; set `device` to `cpu` instead.

- ❌ Don’t try to load files that aren't text encoders (UNets, VAEs, LoRAs) here. It won’t work, and you’ll sit there wondering why your workflow’s broken.

- ❌ Don’t assume the two CLIPs “merge” into a single model. Their weights stay separate: each encoder reads the prompt, and their outputs are combined into one conditioning.

- ❌ Don’t use massive CLIPs on a 4GB GPU unless you really enjoy watching your system swap memory like it’s 2006.

⚠️ Known Issues

- VRAM hungry – Two models = more memory. No surprise here.

- Slow on CPU – If you offload to CPU, expect a noticeable slowdown.

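- Limited validation – The dropdowns only list files found in your text encoder folders, but the node doesn't check that a file is actually a compatible encoder for the chosen `type`. A wrong file may fail with a cryptic error at load time or only show up as bad output downstream.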
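Since the node won't tell you whether a file really is a text encoder, you can peek at it yourself. A `.safetensors` file starts with an 8-byte little-endian header length followed by a JSON header mapping tensor names to dtype, shape, and offsets, so the tensor names can be read without loading any weights. The sketch below does that; the key prefixes it treats as "text encoder" (`text_model.` for CLIP-style exports, `encoder.block.` / `shared.` for T5-style exports) are an assumption about common layouts, so a pass does not guarantee ComfyUI will accept the file and a fail may be a false negative for unusual exports. `write_fake_safetensors` is a hypothetical helper for the demo only.

```python
import json
import os
import struct
import tempfile

MAX_HEADER_BYTES = 100 * 1024 * 1024  # arbitrary sanity cap for this sketch
# Assumption: prefixes commonly seen in CLIP and T5 text-encoder exports.
TEXT_ENCODER_PREFIXES = ("text_model.", "encoder.block.", "shared.")

def read_tensor_names(path: str) -> list:
    """Read tensor names from a .safetensors header without loading weights."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        if header_len > MAX_HEADER_BYTES:
            raise ValueError(f"implausible header size: {header_len} bytes")
        header = json.loads(f.read(header_len))
    header.pop("__metadata__", None)  # optional free-form metadata entry
    return sorted(header)

def looks_like_text_encoder(path: str) -> bool:
    """Heuristic: any tensor name starts with a known text-encoder prefix."""
    return any(name.startswith(TEXT_ENCODER_PREFIXES) for name in read_tensor_names(path))

def write_fake_safetensors(path: str, names: list) -> None:
    """Demo helper: write a tiny file with one F16 scalar per tensor name."""
    header = {
        name: {"dtype": "F16", "shape": [1], "data_offsets": [2 * i, 2 * i + 2]}
        for i, name in enumerate(names)
    }
    header_bytes = json.dumps(header).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header_bytes)))
        f.write(header_bytes)
        f.write(b"\0" * (2 * len(names)))

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "clip_l.safetensors")
        write_fake_safetensors(path, ["text_model.embeddings.token_embedding.weight"])
        print(looks_like_text_encoder(path))  # True
```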

📚 Additional Resources

- How SDXL Uses Dual CLIP Architecture

- Example model files: `clip_l.safetensors`, `clip_g.safetensors`

📝 Notes

- This node is the usual way to load text encoders that ship as separate files (common for Flux), and it's handy for SDXL when your checkpoint doesn't bundle CLIP-L and CLIP-G or when you want to swap one of them.

- Great for prompt tuning, encoder swapping, and style fusion.

- If you're experimenting with new CLIPs, try this node in a sandbox workflow before plugging it into a production chain.