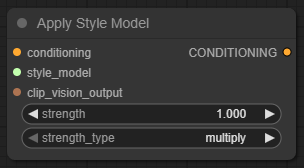

Apply Style Model

Welcome to the secret sauce of stylistic coherence. The Apply Style Model node (internally referred to as StyleModelApply) takes your boring, unflavored conditioning and injects it with a concentrated blast of artistic flair using a reference image and a pre-trained style model.

So if you’ve ever thought, “This image looks cool, but can it look more like that?”, this is the node for you.

📦 What This Node Does

The Apply Style Model node enhances your conditioning data with style information that a style model extracts from a CLIP Vision-encoded reference image. It doesn’t touch your prompt directly; it influences it. Think of it as whispering a visual moodboard into the AI’s ear before it starts painting.

By injecting style information into the conditioning stream, you get generations that feel more cohesive, more on-brand, and—let’s be real—just plain better.
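To make "injecting style information into the conditioning stream" a little more concrete, here is a minimal sketch in plain Python. It assumes the styling step simply appends style tokens after the prompt tokens; the list-based data layout and the `append_style_tokens` helper are hypothetical stand-ins for illustration, not the node's actual code.

```python
from typing import Any

# Hypothetical stand-ins for illustration: a token is a list of floats, and
# each conditioning entry is a [tokens, extras] pair.
Token = list[float]
CondEntry = list[Any]


def append_style_tokens(
    conditioning: list[CondEntry], style_tokens: list[Token]
) -> list[CondEntry]:
    """Return a styled copy of `conditioning`; the input is left untouched."""
    styled = []
    for tokens, extras in conditioning:
        width = len(tokens[0])
        if any(len(tok) != width for tok in style_tokens):
            raise ValueError("style tokens and prompt tokens differ in width")
        # Assumption: styling extends the token sequence, so the prompt
        # tokens survive unchanged and the style tokens ride along after them.
        styled.append([tokens + style_tokens, dict(extras)])
    return styled


# Three prompt tokens of width 2, plus two style tokens of the same width.
prompt_cond = [[[[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]], {"pooled": None}]]
style = [[0.9, 0.9], [0.8, 0.8]]
styled_cond = append_style_tokens(prompt_cond, style)
print(len(prompt_cond[0][0]), "->", len(styled_cond[0][0]))  # prints: 3 -> 5
```

Under that assumption the prompt is never rewritten; the sampler just sees a longer sequence with the style riding along at the end.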

🔌 Inputs

| Name | Type | Description |
|---|---|---|
| `conditioning` | Conditioning | The base prompt conditioning. This is your control signal for the AI, before any styling is applied. |
| `style_model` | Style Model | The pre-trained style model (.ckpt or .safetensors) that knows what "style" looks like. Must contain a `style_embedding`. |
| `clip_vision_output` | CLIP Vision Output | Output from a CLIP Vision encoder node. This is the style reference image, encoded into a format the style model understands. |

🎨 Outputs

| Name | Type | Description |
|---|---|---|
| `conditioning` | Conditioning | Your original conditioning, now dressed in its Sunday best. Stylized and ready for sampling. |

⚙️ Settings & Parameters (Explained)

Let’s break this down:

conditioning

This is the raw, unstyled conditioning information from your prompt or prior conditioning nodes. It’s the “what” of your generation, and this node helps shape the “how.”

- ✅ Required? Yes

- 📌 Comes from: A Prompt node, Style Conditioning node, or similar.

- 🔍 Why it matters: This is the foundation. If it’s weak, inconsistent, or noisy, styling it won’t help.

style_model

A pre-trained style embedding file. This is what transforms your vanilla conditioning into something worthy of a portfolio.

- ✅ Required? Yes

- 📁 Must contain: a `style_embedding` key

- 🧠 Think of it as: A compressed stylistic fingerprint trained from visual data

- ⚠️ If you get an error about a missing key, your file is probably not a real style model.
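If you want to sanity-check a file before wiring it in, the key requirement can be sketched as a simple lookup. This assumes the check is nothing more than "is `style_embedding` among the checkpoint's top-level keys"; the `is_style_model` helper and both key listings below are hypothetical, and loading the keys from a real file is left out on purpose.

```python
from typing import Iterable

REQUIRED_KEY = "style_embedding"  # the key this page says a style model must contain


def is_style_model(state_dict_keys: Iterable[str]) -> bool:
    """True if the checkpoint's top-level keys include the required key."""
    return REQUIRED_KEY in set(state_dict_keys)


# Hypothetical key listings, made up for illustration only.
style_keys = ["style_embedding", "ln_pre.weight", "proj"]
unet_keys = ["model.diffusion_model.input_blocks.0.0.weight"]

for name, keys in [("style file", style_keys), ("regular checkpoint", unet_keys)]:
    verdict = "looks like a style model" if is_style_model(keys) else "missing style_embedding"
    print(f"{name}: {verdict}")
```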

clip_vision_output

Output from a CLIP Vision Encode node. Represents a style image as an embedding vector that your style model can digest.

- ✅ Required? Absolutely

- 🎯 Purpose: This tells the style model which style to apply from its learned embedding space.

- 🖼️ Best practice: Use a clean, high-res image that strongly reflects the style you want to transfer.

🧠 Recommended Use Cases

- Applying the style of a reference image to generations across a series

- Maintaining consistent visual tone in a multi-image workflow

- Stylizing based on actual visual moodboards rather than 10 paragraphs of prompt copypasta

- Generating variations of a concept while preserving artistic identity

🔁 Example Workflow Setup

- 🖼️ Load an image and encode it with `CLIP Vision Encode`

- 🧠 Load your `Style Model` using the `Load Style Model` node

- 📝 Create prompt conditioning (text prompt → conditioning)

- 🎨 Apply the style with `Apply Style Model`

- 🔄 Feed the new `conditioning` into a sampler like `KSampler` and render your styled image
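The steps above can be sketched as a node graph written out as a Python dict, in the spirit of an exported API-style workflow. Treat it as a wiring diagram rather than a drop-in file: only `StyleModelApply` and its three input names come from this page. Every other class name, input name, and file name is an assumption or placeholder to verify against your own install, and the `dangling_links` helper is hypothetical.

```python
# Node ids are arbitrary strings; a link is [source_node_id, output_index].
# File names are placeholders. Apart from StyleModelApply and its three
# inputs, class and input names are assumptions; verify them locally.
workflow = {
    "1": {"class_type": "LoadImage", "inputs": {"image": "style_reference.png"}},
    "2": {"class_type": "CLIPVisionLoader", "inputs": {"clip_name": "clip_vision.safetensors"}},
    "3": {"class_type": "CLIPVisionEncode", "inputs": {"clip_vision": ["2", 0], "image": ["1", 0]}},
    "4": {"class_type": "StyleModelLoader", "inputs": {"style_model_name": "style_model.safetensors"}},
    "5": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": "base_model.safetensors"}},
    "6": {"class_type": "CLIPTextEncode", "inputs": {"clip": ["5", 1], "text": "a lighthouse at dusk"}},
    "7": {
        "class_type": "StyleModelApply",
        "inputs": {
            "conditioning": ["6", 0],
            "style_model": ["4", 0],
            "clip_vision_output": ["3", 0],
        },
    },
    # Node 7's output would then feed the sampler's positive conditioning input.
}


def dangling_links(graph: dict) -> list[str]:
    """List every input link that points at a node id missing from the graph."""
    problems = []
    for node_id, node in graph.items():
        for name, value in node["inputs"].items():
            is_link = isinstance(value, list) and len(value) == 2
            if is_link and value[0] not in graph:
                problems.append(f"{node_id}.{name} -> missing node {value[0]}")
    return problems


print(dangling_links(workflow))  # prints: []
```

Writing the graph out like this makes one thing obvious: the style branch (nodes 1 to 4) and the prompt branch (nodes 5 and 6) only meet at the Apply Style Model node.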

💡 Prompting Tips

- Let the style model do the heavy lifting on aesthetics; don’t overcompensate with excessive prompt descriptors.

- Want a touch of style instead of full commitment? Consider blending styled and unstyled conditioning.

- Use consistent reference images if you’re going for a themed batch—AI doesn’t do nuance unless you spoon-feed it.
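For the "touch of style" tip, here is one way the arithmetic of blending could work out, sketched in plain Python. It assumes two things that this page does not confirm: that styling only appends tokens after the prompt tokens, and that the shorter unstyled sequence is zero-padded before a linear blend. The `blend_with_unstyled` helper is hypothetical.

```python
def blend_with_unstyled(
    unstyled: list[list[float]], styled: list[list[float]], weight: float
) -> list[list[float]]:
    """Linear blend of two token sequences; `weight` is the styled share."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    if len(styled) < len(unstyled):
        raise ValueError("styled sequence is expected to be the longer one")
    width = len(styled[0])
    # Assumption: the unstyled sequence is zero-padded to the styled length.
    padding = [[0.0] * width for _ in range(len(styled) - len(unstyled))]
    padded = unstyled + padding
    return [
        [(1.0 - weight) * u + weight * s for u, s in zip(u_tok, s_tok)]
        for u_tok, s_tok in zip(padded, styled)
    ]


unstyled = [[1.0, 2.0]]                # one prompt token
styled = [[1.0, 2.0], [4.0, 8.0]]      # same prompt token plus one style token
print(blend_with_unstyled(unstyled, styled, 0.5))  # prints: [[1.0, 2.0], [2.0, 4.0]]
```

Under those assumptions the prompt token comes through unchanged and the style token is simply scaled by the weight, so the blend weight behaves like a style-strength dial.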

🧯 What-Not-To-Do-Unless-You-Want-A-Fire

- ❌ Don’t feed in a style model that’s not actually a style model (missing `style_embedding`)

- ❌ Don’t mismatch your `clip_vision_output` and your style model; the results will be ugly or broken (or both)

- ❌ Don’t pass a `None` or broken `clip_vision_output` or you’ll meet the dreaded `'NoneType' object has no attribute 'flatten'` error

- ❌ Don’t expect miracles if your base `conditioning` is garbage. Style can’t polish a turd (though it will try)

🧱 Common Errors & Fixes

| Error Message | Translation | Fix |
|---|---|---|
| `invalid style model <ckpt_path>` | Your style model is missing a `style_embedding` | Use a proper style model file |
| `AttributeError: 'NoneType' object has no attribute 'flatten'` | `clip_vision_output` is empty or invalid | Check your CLIP Vision node; verify input image is loaded |
| `RuntimeError: Sizes of tensors must match except in dimension 1` | Your style model and conditioning tensors don’t align | Make sure all inputs come from compatible models/components |
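The last row of the table is the least self-explanatory, so here is a small sketch of what "must match except in dimension 1" means. It assumes the failure comes from joining the style tokens onto the conditioning along the token axis (dimension 1). The `concat_problem` helper and the example shapes are hypothetical.

```python
from typing import Optional


def concat_problem(cond_shape: tuple, style_shape: tuple, dim: int = 1) -> Optional[str]:
    """Return why two shapes cannot be joined along `dim`, or None if they can."""
    if len(cond_shape) != len(style_shape):
        return f"rank mismatch: {len(cond_shape)} vs {len(style_shape)}"
    for axis, (a, b) in enumerate(zip(cond_shape, style_shape)):
        # Only the join axis may differ; every other axis must agree.
        if axis != dim and a != b:
            return f"axis {axis} mismatch: {a} vs {b}"
    return None


# Illustrative shapes as (batch, tokens, width); the numbers are assumptions.
print(concat_problem((1, 77, 768), (1, 8, 768)))
print(concat_problem((1, 77, 768), (1, 8, 1024)))
```

The token counts are free to differ, which is the whole point of appending style tokens. The width is not, and a width mismatch usually means the style model was built for a different model family than the one that produced the conditioning.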

📝 Final Notes

- The `Apply Style Model` node is a powerful enhancement tool: think of it as applying makeup with an airbrush instead of a crayon.

- Different style models behave differently, and some are extremely opinionated. Try several before committing to one.

- For best results: Pair high-quality CLIP encodings with thoughtfully designed conditioning and prompts.

- You’ll get more reliable results if your CLIP Vision model and style model are aligned (i.e., trained to work together).