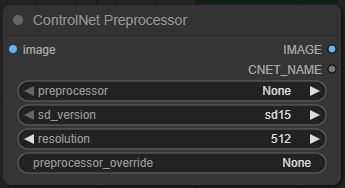

ControlNet Preprocessor

Welcome to the wonderfully temperamental world of ControlNet Preprocessors, where your image dreams live or die by the edges, lines, depth maps, and semantic scribbles this node extracts. The ControlNet Preprocessor node in ComfyUI is your gateway to preparing an image for ControlNet conditioning. It translates a raw image into a control map (Canny edges, a depth map, an OpenPose skeleton, and so on) that a ControlNet model can understand and use to guide generation.

🧩 Purpose

This node is responsible for:

- Selecting and applying a preprocessing algorithm to an input image.

- Generating a control map (hint: not a pretty picture, but a very useful one).

- Preparing that map for direct use in ControlNet nodes such as Apply ControlNet, which pairs it with a model loaded by ControlNetLoader.

Think of this as the translator between your input image and the language your ControlNet model speaks.

⚙️ Node Inputs & Outputs

Inputs

- IMAGE – The image to preprocess, as standard RGB image data (usually from Load Image, Image Input, or a previous generation).

Outputs

- IMAGE – The output control map, ready to be passed into an Apply ControlNet (ControlNetApply) node or similar.
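For orientation, here is a minimal sketch of what "standard RGB image data" looks like once it is inside ComfyUI. As we understand it, ComfyUI passes IMAGE data as a float tensor shaped [batch, height, width, channels] with values from 0.0 to 1.0; the sketch below uses nested Python lists instead of a torch tensor, and the helper names `to_comfy_image` and `shape` are hypothetical.

```python
from typing import List, Tuple

# Sketch: ComfyUI IMAGE data is (to our understanding) a float tensor shaped
# [batch, height, width, channels] with values in 0.0-1.0.
# Nested Python lists stand in for the torch tensor here.
Pixel = Tuple[int, int, int]


def to_comfy_image(rows: List[List[Pixel]]) -> List[List[List[List[float]]]]:
    """Turn one H x W grid of 8-bit RGB pixels into a batch of size 1."""
    if not rows or not rows[0]:
        raise ValueError("image must have at least one pixel")
    width = len(rows[0])
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same width")
    # Scale each 0-255 channel value into the 0.0-1.0 range.
    image = [[[channel / 255.0 for channel in px] for px in row] for row in rows]
    return [image]  # add the leading batch dimension


def shape(batch: List) -> Tuple[int, int, int, int]:
    """Report (batch, height, width, channels) for the nested-list image."""
    return (len(batch), len(batch[0]), len(batch[0][0]), len(batch[0][0][0]))


if __name__ == "__main__":
    img = to_comfy_image([[(255, 0, 0), (0, 255, 0)],
                          [(0, 0, 255), (255, 255, 255)]])
    print(shape(img))
```

The control map this node outputs travels in the same IMAGE layout, which is why it can be wired straight into Apply ControlNet.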

🔧 Node Settings (Detailed)

preprocessor

This dropdown lists available preprocessing algorithms. Each preprocessor generates a control map from your input image in a specific format expected by the corresponding ControlNet model.

Common options include:

| Name | Description | Use Case |
|---|---|---|
| canny | Applies Canny edge detection. Produces a black-and-white edge map. | Outlines and sketchy aesthetics. |
| depth | Generates a depth estimation map. | Realistic lighting and 3D-aware outputs. |
| depth_leres | Higher-quality monocular depth estimation using LeReS. | Photorealistic results with more accurate spatial depth. |
| mlsd | Detects straight line segments (M-LSD). | Architectural renders or structured geometry. |
| openpose | Detects human body keypoints. | Character poses, action shots, motion control. |
| scribble | Converts images into scribble-style contours. | Sketch generation, cartoon-like input. |
| normal_bae | Surface normal estimation. | Fine texture and lighting-sensitive rendering. |
| hed, pidinet, softedge | Soft edge detection with different neural backends. | Better results in abstract or noisy environments. |
| segmentation | Semantic segmentation map. | Scene understanding and targeted prompting. |

🔎 Tip: The preprocessor must match the ControlNet model being used. Don’t feed canny output into a depth ControlNet unless you're into chaos.
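One way to make that rule hard to break is a pre-flight check before queuing a run. The sketch below is hypothetical: the `PREPROCESSOR_FAMILY` mapping and the family labels are illustrative simplifications (real ControlNets sometimes accept more than one map type), not an official compatibility list.

```python
from typing import Dict

# Hypothetical, simplified one-to-one mapping from preprocessor to the
# ControlNet family it is meant to feed. Not an official list.
PREPROCESSOR_FAMILY: Dict[str, str] = {
    "canny": "canny",
    "depth": "depth",
    "depth_leres": "depth",
    "mlsd": "mlsd",
    "openpose": "openpose",
    "scribble": "scribble",
    "normal_bae": "normal",
    "hed": "softedge",
    "pidinet": "softedge",
    "softedge": "softedge",
    "segmentation": "seg",
}


def check_pairing(preprocessor: str, controlnet_family: str) -> None:
    """Raise ValueError when a preprocessor's map type does not suit the ControlNet."""
    family = PREPROCESSOR_FAMILY.get(preprocessor)
    if family is None:
        raise ValueError(f"unknown preprocessor: {preprocessor!r}")
    if family != controlnet_family:
        raise ValueError(
            f"{preprocessor!r} produces {family!r} maps, "
            f"but the ControlNet expects {controlnet_family!r}"
        )


if __name__ == "__main__":
    check_pairing("depth_leres", "depth")  # fine: both are depth
    try:
        check_pairing("canny", "depth")  # the chaos case from the tip above
    except ValueError as err:
        print("Mismatch:", err)
```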


sd_version

Select the version of the Stable Diffusion base model you're using:

- 1.5

- 2.0

- 2.1

- XL (aka SDXL)

💡 Why it matters: ControlNet models are trained for a specific base model version, so an SD 1.5 ControlNet will not work with an SDXL checkpoint (and vice versa). Using the wrong SD version leads to mismatches between control signals and generation behavior. Translation: ugly results or nothing at all.
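A minimal sketch of a version sanity check, assuming you record which sd_version each checkpoint and ControlNet was built for. The label spellings and the grouping of 2.0 with 2.1 into one family are assumptions made for illustration; check each model's description for what it actually supports.

```python
from typing import Dict

# Hypothetical grouping of sd_version labels into model families.
# Treating 2.0 and 2.1 as one family is an assumption.
VERSION_FAMILY: Dict[str, str] = {
    "1.5": "sd1",
    "2.0": "sd2",
    "2.1": "sd2",
    "xl": "sdxl",
    "sdxl": "sdxl",
}


def version_family(label: str) -> str:
    """Normalize a label such as ' XL ' and return its family name."""
    key = label.strip().lower()
    if key not in VERSION_FAMILY:
        raise ValueError(f"unsupported sd_version: {label!r}")
    return VERSION_FAMILY[key]


def versions_compatible(base_version: str, controlnet_version: str) -> bool:
    """True when the base checkpoint and the ControlNet belong to the same family."""
    return version_family(base_version) == version_family(controlnet_version)


if __name__ == "__main__":
    print(versions_compatible("1.5", "XL"))
    print(versions_compatible("XL", "sdxl"))
```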

resolution

Defines the resolution at which the preprocessing is applied. This value controls the size of the generated control map — not your output image.

- Typical range: 256–1024

- Default: often 512 or 768, depending on your workflow.

📏 Higher resolution gives more detail in the control map but increases GPU load and can amplify noise or artifacts.

✂️ If your base model is SDXL, 1024 is ideal. For SD 1.5, stick to 512–768 unless you're feeling brave (or your GPU is).
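To see how resolution translates into an actual control map size, here is a sketch that follows a convention common in ControlNet annotator code: scale the image so its shorter side equals `resolution`, then round both sides to the nearest multiple of 64. Whether this particular node resizes exactly this way is an assumption, and the `DEFAULT_RESOLUTION` table simply encodes the rule of thumb above.

```python
from typing import Dict, Tuple

# Hypothetical per-version defaults, taken from the guidance above.
DEFAULT_RESOLUTION: Dict[str, int] = {"1.5": 512, "xl": 1024}


def default_resolution(sd_version: str) -> int:
    """Pick a starting resolution for a version label, falling back to 512."""
    return DEFAULT_RESOLUTION.get(sd_version.strip().lower(), 512)


def control_map_size(width: int, height: int, resolution: int) -> Tuple[int, int]:
    """Scale the shorter side to `resolution`, then snap both sides to multiples of 64."""
    if min(width, height) <= 0 or resolution <= 0:
        raise ValueError("dimensions and resolution must be positive")
    scale = resolution / min(width, height)
    new_w = max(64, round(width * scale / 64) * 64)
    new_h = max(64, round(height * scale / 64) * 64)
    return new_w, new_h


if __name__ == "__main__":
    # A 1920x1080 photo preprocessed for SD 1.5.
    print(control_map_size(1920, 1080, default_resolution("1.5")))
```

Under this convention a 1920x1080 input at resolution 512 yields an 896x512 map: the aspect ratio survives, but the map is far smaller than the source, which is exactly why the value does not dictate your final output size.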

preprocessor_override

An advanced field for custom preprocessor logic or specifying third-party / external preprocessor modules.

- Default: Usually empty.

- What it does: overrides the default behavior of the selected preprocessor.

🧪 Use this if:

- You're using an external preprocessor script.

- You're trying a forked or experimental ControlNet.

- You like voiding warranties and doing weird things.
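What the override field accepts depends on the node implementation, so treat the following as a hypothetical pattern rather than this node's behavior. It assumes an override written as a `module:function` string that takes precedence over the dropdown when non-empty. It also shows why a bad override crashes: a wrong module name raises `ImportError`, and a wrong function name raises `AttributeError`. The function name `resolve_preprocessor` and the `registry` argument are illustrative.

```python
import importlib
from typing import Callable, Dict


def resolve_preprocessor(preprocessor: str, preprocessor_override: str,
                         registry: Dict[str, Callable]) -> Callable:
    """Return the override callable if one is given, else the dropdown choice.

    The 'module:function' override format is a hypothetical convention.
    """
    override = preprocessor_override.strip()
    if not override:
        return registry[preprocessor]  # normal path: use the dropdown
    module_name, sep, attr = override.partition(":")
    if not sep or not module_name or not attr:
        raise ValueError(f"override must look like 'module:function', got {override!r}")
    module = importlib.import_module(module_name)  # ImportError if the module is missing
    func = getattr(module, attr)  # AttributeError if the name is wrong
    if not callable(func):
        raise TypeError(f"{override!r} does not name a callable")
    return func


if __name__ == "__main__":
    builtin = {"canny": lambda image: image}  # stand-in for a real preprocessor
    print(resolve_preprocessor("canny", "", builtin))
    print(resolve_preprocessor("canny", "json:dumps", builtin))
```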

🛠 Recommended Use Cases

| Use Case | Preprocessor |
|---|---|
| Pose control | openpose |
| Facial keypoints | face (if available), openpose with face enabled |
| Depth-based realism | depth, depth_leres |
| Background flattening | segmentation |
| Line drawing prompts | canny, mlsd, hed, scribble |
| Structural precision | mlsd, normal_bae |
| Abstract forms | softedge, pidinet |

🧩 Typical Workflow Integration

Here’s how you’d use this node in a standard ControlNet setup:

- 🔁 Input your image via a loader node (Load Image, Image Input, etc.).

- 📐 Pipe it into ControlNet Preprocessor.

- 📦 Send this node's output into Apply ControlNet → KSampler for generation. Apply ControlNet also needs a model from ControlNetLoader and your prompt conditioning.

[Input Image] → [ControlNet Preprocessor] → [Apply ControlNet] → [KSampler]

If you're working with multiple ControlNets, each will usually have its own preprocessor instance.
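The same chain can be written down in the shape of ComfyUI's API-format workflow JSON, where each node id maps to a `class_type` plus `inputs`, and a link is `[source_node_id, output_index]`. This is a trimmed sketch: `ControlNetPreprocessor` is a placeholder class name (check the actual node's class in your install), the model filename is hypothetical, and required inputs such as prompt conditioning, the checkpoint, and sampler settings are omitted. The `execution_order` helper uses Kahn's algorithm to show that links alone determine the run order.

```python
from collections import deque
from typing import Dict, List

# Trimmed, API-format-style graph. "ControlNetPreprocessor" is a placeholder
# class name and "openpose_model.safetensors" is a hypothetical filename.
workflow: Dict[str, dict] = {
    "1": {"class_type": "LoadImage", "inputs": {"image": "pose.png"}},
    "2": {"class_type": "ControlNetPreprocessor",
          "inputs": {"image": ["1", 0], "preprocessor": "openpose",
                     "sd_version": "1.5", "resolution": 512}},
    "3": {"class_type": "ControlNetLoader",
          "inputs": {"control_net_name": "openpose_model.safetensors"}},
    "4": {"class_type": "ControlNetApply",  # conditioning input omitted
          "inputs": {"control_net": ["3", 0], "image": ["2", 0], "strength": 1.0}},
    "5": {"class_type": "KSampler", "inputs": {"positive": ["4", 0]}},  # trimmed
}


def execution_order(graph: Dict[str, dict]) -> List[str]:
    """Kahn's algorithm: a node runs only after every node it links to."""
    deps = {nid: set() for nid in graph}
    for nid, node in graph.items():
        for value in node["inputs"].values():
            # A link looks like [source_node_id, output_index].
            if isinstance(value, list) and len(value) == 2 and value[0] in graph:
                deps[nid].add(value[0])
    dependents: Dict[str, List[str]] = {nid: [] for nid in graph}
    for nid, sources in deps.items():
        for src in sources:
            dependents[src].append(nid)
    remaining = {nid: len(sources) for nid, sources in deps.items()}
    ready = deque(sorted(nid for nid, count in remaining.items() if count == 0))
    order: List[str] = []
    while ready:
        nid = ready.popleft()
        order.append(nid)
        for child in sorted(dependents[nid]):
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)
    if len(order) != len(graph):
        raise ValueError("workflow contains a cycle")
    return order


if __name__ == "__main__":
    print([workflow[nid]["class_type"] for nid in execution_order(workflow)])
```

With multiple ControlNets you would add one preprocessor node and one Apply ControlNet node per map type, chaining each Apply ControlNet's conditioning output into the next.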

🚫 What-Not-To-Do-Unless-You-Want-a-Fire

Oh, so you like chaos? You enjoy watching your GPU cry? Great, then here's what not to do with the preprocessor setting unless you're actively trying to summon the AI demons of instability:

❌ Use the wrong preprocessor with the wrong ControlNet model

You wouldn't feed a cat spaghetti and expect it to do math. Likewise, don't feed pose_animal output into a ControlNet trained for depth_midas. The result? Nonsense conditioning, wasted steps, and outputs that look like AI had an existential crisis.

Fix: Always match your preprocessor with its sibling ControlNet (e.g., hed → HED model, depth_anything → ControlNet trained on Depth Anything).

❌ Forget to install dependencies

Half of these preprocessors are built on third-party magic. Missing detectron2, segment-anything, openpose, or opencv? You’ll get red errors, blank images, or worse: success that isn’t actually success.

Fix: Check your install. Use a requirements.txt file. Don’t YOLO this.
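Before blaming the node, you can check whether the Python modules behind these preprocessors are importable at all. This sketch uses the standard library's `importlib.util.find_spec`. The package-to-module names are assumptions to verify against each package's docs (opencv-python, for example, installs the module `cv2`), and openpose is left out because its import name varies by distribution.

```python
import importlib.util
from typing import Dict, List

# Package label -> import name. The import names are assumptions;
# verify them against each package's documentation.
OPTIONAL_MODULES: Dict[str, str] = {
    "opencv": "cv2",
    "detectron2": "detectron2",
    "segment-anything": "segment_anything",
}


def missing_modules(modules: Dict[str, str]) -> List[str]:
    """Return the labels whose top-level module cannot be found."""
    return [label for label, module in modules.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_modules(OPTIONAL_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All listed preprocessor dependencies were found.")
```

`find_spec` only confirms that a module can be located; it does not catch version mismatches, so a clean report here is necessary but not sufficient.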

❌ Run high-res images through depth_leres or sam on 8GB VRAM

If you're running a potato laptop with a fancy GPU sticker but no actual power, please don’t crank depth_leres or sam to 2048x2048. These models will eat your VRAM and then casually torch your runtime with an out-of-memory error.

Fix: Stay under 1024x1024 unless you’re packing real heat.
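The arithmetic behind that warning: pixel count grows with the square of the side length, so 2048x2048 carries four times the pixels of 1024x1024, and memory use generally climbs with it. A small guard, with the 8 GB threshold and 1024 cap taken from the rule of thumb above (the function names are hypothetical):

```python
def pixel_ratio(side_a: int, side_b: int) -> float:
    """How many times more pixels a square of side_a has than one of side_b."""
    return (side_a * side_a) / (side_b * side_b)


def capped_resolution(requested: int, vram_gb: float, low_vram_cap: int = 1024) -> int:
    """Clamp the preprocessing resolution on low-VRAM GPUs.

    The 8 GB threshold and 1024 cap are rules of thumb, not measured limits.
    """
    if vram_gb <= 8:
        return min(requested, low_vram_cap)
    return requested


if __name__ == "__main__":
    print(pixel_ratio(2048, 1024))
    print(capped_resolution(2048, vram_gb=8))
```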

❌ Expect perfect outlines from scribble_xdog on low-contrast images

Low contrast images + xdog = muddy soup. It’s not a “dreamlike sketch,” it’s a failed art student’s nightmare.

Fix: Boost your image contrast before applying xdog.
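A min-max contrast stretch is one simple way to boost contrast before XDoG. It remaps the darkest value to 0 and the brightest to 255, spreading everything in between. The sketch works on a flat list of 8-bit grayscale values to stay dependency-free; in practice you would apply the same idea to the whole image array with an image library or an upstream ComfyUI node.

```python
from typing import List


def stretch_contrast(pixels: List[int]) -> List[int]:
    """Linearly remap 8-bit grayscale values so they span the full 0-255 range."""
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        return list(pixels)  # flat image: nothing to stretch
    scale = 255 / (hi - lo)
    return [round((p - lo) * scale) for p in pixels]


if __name__ == "__main__":
    muddy = [100, 110, 120, 130]  # a narrow, low-contrast band of grays
    print(stretch_contrast(muddy))
```

For the muddy band above the result is [0, 85, 170, 255]. A single stray black or white pixel will defeat plain min-max, so percentile clipping is a common refinement.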

❌ Use shuffle and expect consistency

Shuffle does what it says—it shuffles. It’s not a structured preprocessor, it’s an agent of chaos.

Fix: Don’t use it unless you want variety over control. Never in production workflows. Ever.

❌ Assume pose_dense will get every joint right

If your character is lying down, twisted, or facing away from the camera, pose_dense might just give up entirely. Expect floating limbs and mysterious spaghetti arms.

Fix: Stick with standard pose or dwpose for more stable results. Always validate visually.

❌ Mix multiple preprocessors on the same conditioning channel

Unless your ControlNet expects a specific composite input (and you really know what you're doing), mixing outputs like depth + canny into the same ControlNet model is like throwing oil and water into a blender—loud, messy, and completely ineffective.

Fix: One preprocessor, one ControlNet, per channel. Keep your chaos modular.

❌ Skip normalization when using normalmap_*

Feeding an unnormalized or overly bright image into a normalmap extractor? Get ready for washed-out normals or weird lighting shadows.

Fix: Preprocess with tone mapping or exposure correction first.
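As an illustration of exposure correction, here is an automatic gamma adjustment, one of several possible techniques and not necessarily what any specific tone-mapping node does. It picks gamma = log(target) / log(mean) so that the image mean moves toward a mid-gray target. Values are assumed to be normalized to 0.0-1.0, and the function name `auto_gamma` is hypothetical.

```python
import math
from typing import List


def auto_gamma(values: List[float], target_mean: float = 0.5) -> List[float]:
    """Apply a gamma curve that pulls the mean of 0.0-1.0 values toward target_mean.

    Because mean ** gamma == target_mean, a flat image lands exactly on the
    target; for other images the new mean lands near it.
    """
    mean = sum(values) / len(values)
    if mean <= 0.0 or mean >= 1.0:
        return list(values)  # all-black or all-white: a gamma curve cannot help
    gamma = math.log(target_mean) / math.log(mean)
    return [v ** gamma for v in values]


if __name__ == "__main__":
    overexposed = [0.7, 0.9, 0.95]
    print(auto_gamma(overexposed))
```

For an overexposed image the mean is above 0.5, so gamma comes out greater than 1 and every value below 1.0 gets darker, which is the direction a washed-out input needs.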

❌ Rely on seg_* for precision mask work

Semantic segmentation ≠ accurate masking. These models often blur edges or clip object boundaries. Don’t use them if you're trying to do surgical precision work like inpainting hair strands.

Fix: Use sam instead. It’s designed for precision.

❌ Forget that more preprocessing ≠ better results

Yes, we know—it’s tempting to run every image through five preprocessors, load five ControlNets, and see what happens. But you’ll probably just get noise, hallucinations, or broken anatomy.

Fix: Be deliberate. Preprocessors are tools, not spice blends. Pick the one that suits your task, and leave the rest out of your stew.

And finally:

🔥 Don’t forget to laugh when it breaks

This is ComfyUI. If something goes wrong and you get AI soup or a melted mannequin, remember: it’s not a bug, it’s a rite of passage.

🧱 Known Issues

- Compatibility Hell: Some third-party ControlNet models expect specific preprocessors. Always check the model description.

- OpenPose & Depth tend to produce black outputs on faulty or low-contrast inputs. Try normalizing the image or adjusting lighting.

- Preprocessor-Override crashes? Likely due to an invalid import, path issue, or incompatible Python logic.
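For the black-output issue, a cheap automated check can flag suspicious maps before they waste a sampling run. This sketch counts how many pixels of an 8-bit grayscale control map rise above a near-black threshold. Both the threshold and the minimum fraction are assumed values to tune per preprocessor (an OpenPose skeleton legitimately covers only a small share of the frame), and it supplements a visual check rather than replacing one.

```python
from typing import List


def looks_blank(pixels: List[int], threshold: int = 8, min_fraction: float = 0.001) -> bool:
    """Flag a control map whose pixels are almost all near-black.

    threshold: values at or below this count as black (assumed cutoff).
    min_fraction: minimum share of non-black pixels for a usable map (assumed).
    """
    if not pixels:
        return True
    lit = sum(1 for p in pixels if p > threshold)
    return lit / len(pixels) < min_fraction


if __name__ == "__main__":
    print(looks_blank([0] * 1000))              # an all-black map
    print(looks_blank([0] * 990 + [255] * 10))  # sparse lines, like a pose skeleton
```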

🔚 Summary

| Setting | Description | Important Notes |
|---|---|---|
| preprocessor | Algorithm used to extract control data | Must match ControlNet type |
| sd_version | Specifies Stable Diffusion version | Must match your base model and ControlNet |
| resolution | Size of the control map | Higher = more detail, more VRAM |
| preprocessor_override | Advanced override | For custom pipelines or chaos monkeys |

This node is the backbone of structured, repeatable generation with ControlNet. Master it, and you’ll go from "kinda random" to "surgical precision" in your generations.